CS224W - Colab 2


In Colab 2, we will construct our own graph neural network using PyTorch Geometric (PyG) and then apply that model to two Open Graph Benchmark (OGB) datasets. These two datasets will be used to benchmark your model’s performance on two different graph-based tasks: 1) node property prediction, predicting properties of single nodes, and 2) graph property prediction, predicting properties of entire graphs or subgraphs.

First, we will learn how PyTorch Geometric stores graphs as PyTorch tensors.

Then, we will load and inspect one of the Open Graph Benchmark (OGB) datasets by using the ogb package. OGB is a collection of realistic, large-scale, and diverse benchmark datasets for machine learning on graphs. The ogb package not only provides data loaders for each dataset but also model evaluators.

Lastly, we will build our own graph neural network using PyTorch Geometric. We will then train and evaluate our model on the OGB node property prediction and graph property prediction tasks.

Note: Make sure to run all the cells in each section sequentially, so that the intermediate variables and packages carry over to the next cell.

We recommend you save a copy of this colab in your drive so you don’t lose progress!

The expected time to finish this Colab is 2 hours. However, debugging training loops can easily take a while. So, don’t worry at all if it takes you longer! Have fun and good luck on Colab 2 :)

Device

You might need to use a GPU for this Colab to run quickly.

Please click Runtime and then Change runtime type. Then set the hardware accelerator to GPU.

Setup

As discussed in Colab 0, the installation of PyG on Colab can be a little bit tricky. First, let us check which version of PyTorch you are running:

1 | import torch |

PyTorch has version 2.4.1

Download the necessary packages for PyG. Make sure that the torch version in the install command matches the output from the cell above. In case of any issues, more information can be found on PyG’s installation page.

1 | # Install torch geometric |

1) PyTorch Geometric (Datasets and Data)

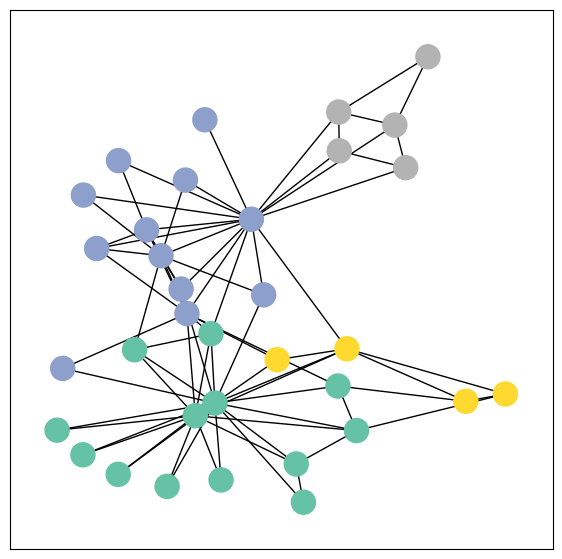

PyTorch Geometric has two modules for storing and/or transforming graphs into tensor format. One is torch_geometric.datasets, which contains a variety of common graph datasets. The other is torch_geometric.data, which provides the data handling of graphs in PyTorch tensors.

In this section, we will learn how to use torch_geometric.datasets and torch_geometric.data together.

PyG Datasets

The torch_geometric.datasets module has many common graph datasets. Here we will explore its usage through one example dataset.

1 | from torch_geometric.datasets import TUDataset |

ENZYMES(600)

Question 1: What is the number of classes and number of features in the ENZYMES dataset? (5 points)

1 | def get_num_classes(pyg_dataset): |

ENZYMES dataset has 6 classes

ENZYMES dataset has 3 features

PyG Data

Each PyG dataset stores a list of torch_geometric.data.Data objects, where each torch_geometric.data.Data object represents a graph. We can easily get the Data object by indexing into the dataset.

For more information such as what is stored in the Data object, please refer to the documentation.
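To make the tensor layout concrete, here is a minimal, dependency-free sketch of the fields a Data object carries. It uses plain Python lists as stand-ins for the PyTorch tensors that PyG actually stores, and the helper name `describe` is hypothetical. The edge list is kept in coordinate (COO) form: one row of source nodes and one row of target nodes, which is why edge_index has shape [2, num_edges].

```python
# Illustration only: real Data objects hold torch tensors, not Python lists.
# A triangle graph with 3 nodes; each undirected edge appears in both directions.
edge_index = [
    [0, 1, 1, 2, 2, 0],  # source node of every directed edge
    [1, 0, 2, 1, 0, 2],  # target node of every directed edge
]
x = [  # one feature row per node: shape [num_nodes, num_features]
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
y = [4]  # a single graph-level label: shape [1]


def describe(edge_index, x, y):
    """Return the shapes of the three fields, mirroring how PyG prints a Data object."""
    num_edges = len(edge_index[0])
    return {
        "edge_index": [2, num_edges],
        "x": [len(x), len(x[0])],
        "y": [len(y)],
    }


summary = describe(edge_index, x, y)
print(summary)
```

The shapes reported here play the same role as the `Data(edge_index=[2, ...], x=[..., ...], y=[1])` summaries printed for the real datasets.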

Question 2: What is the label of the graph with index 100 in the ENZYMES dataset? (5 points)

1 | def get_graph_class(pyg_dataset, idx): |

Data(edge_index=[2, 168], x=[37, 3], y=[1])

Graph with index 100 has label 4

Question 3: How many edges does the graph with index 200 have? (5 points)

1 | def get_graph_num_edges(pyg_dataset, idx): |

Graph with index 200 has 53 edges
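A note on counting: PyG typically stores an undirected edge as two directed entries in edge_index, so the number of columns is twice the number of undirected edges. The sketch below shows one way to count each undirected edge once. It works on plain Python lists rather than tensors, and the function name `count_undirected_edges` is a hypothetical helper, not part of PyG.

```python
def count_undirected_edges(edge_index):
    """Count undirected edges in a COO edge list, treating (u, v) and (v, u) as one edge."""
    sources, targets = edge_index
    # Normalise every directed pair so the smaller node id comes first, then deduplicate.
    unique_pairs = {(min(u, v), max(u, v)) for u, v in zip(sources, targets)}
    return len(unique_pairs)


# Path graph 0 - 1 - 2, stored with both directions of every edge.
edge_index = [
    [0, 1, 1, 2],
    [1, 0, 2, 1],
]
num_directed = len(edge_index[0])
num_undirected = count_undirected_edges(edge_index)
print(num_directed, num_undirected)
```

If the edge list is known to contain every edge in both directions and no duplicates, simply halving the number of columns gives the same result.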

2) Open Graph Benchmark (OGB)

The Open Graph Benchmark (OGB) is a collection of realistic, large-scale, and diverse benchmark datasets for machine learning on graphs. Its datasets are automatically downloaded, processed, and split using the OGB Data Loader. The model performance can then be evaluated by using the OGB Evaluator in a unified manner.

Dataset and Data

OGB also supports PyG dataset and data classes. Here we take a look at the ogbn-arxiv dataset.

1 | import torch_geometric.transforms as T |

The ogbn-arxiv dataset has 1 graph

Data(num_nodes=169343, x=[169343, 128], node_year=[169343, 1], y=[169343, 1], adj_t=[169343, 169343, nnz=1166243])


Question 4: How many features are in the ogbn-arxiv graph? (5 points)

1 | def graph_num_features(data): |

The graph has 128 features

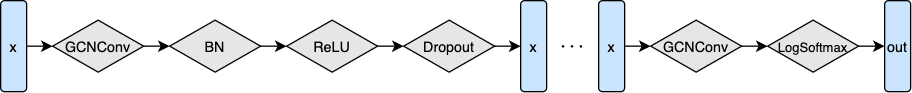

3) GNN: Node Property Prediction

In this section we will build our first graph neural network using PyTorch Geometric. Then we will apply it to the task of node property prediction (node classification).

Specifically, we will use GCN as the foundation for our graph neural network (Kipf and Welling (2017)). To do so, we will work with PyG’s built-in GCNConv layer.
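As a rough intuition for what a GCN layer computes, the sketch below performs one propagation step in plain Python: self-loops are added, and each node sums its neighbors' features weighted by 1 / sqrt(deg(i) * deg(j)). This is a simplified illustration under stated assumptions, not PyG's implementation: the learnable weight matrix and bias are omitted, and the function name `gcn_propagate` is hypothetical.

```python
import math


def gcn_propagate(num_nodes, edges, x):
    """One GCN aggregation step with symmetric normalisation and no learnable weights.

    edges: list of undirected (u, v) pairs; x: one feature list per node.
    """
    # Every node is its own neighbor (self-loop), plus its graph neighbors.
    neighbors = {i: {i} for i in range(num_nodes)}
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)
    degree = {i: len(neighbors[i]) for i in range(num_nodes)}

    dim = len(x[0])
    out = []
    for i in range(num_nodes):
        row = [0.0] * dim
        for j in neighbors[i]:
            coef = 1.0 / math.sqrt(degree[i] * degree[j])
            for k in range(dim):
                row[k] += coef * x[j][k]
        out.append(row)
    return out


# Two nodes joined by one edge: with self-loops both degrees are 2,
# so every coefficient is 1 / sqrt(2 * 2) = 0.5.
out = gcn_propagate(2, [(0, 1)], [[1.0], [3.0]])
print(out)
```

In a full layer, the aggregated features would additionally be multiplied by a learned weight matrix, which GCNConv handles for us.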

Setup

1 | import torch |

2.4.1

Load and Preprocess the Dataset

1 | if 'IS_GRADESCOPE_ENV' not in os.environ: |

Device: cpu

GCN Model

Now we will implement our GCN model!

Please follow the figure below to implement the forward function.

References:

https://pytorch-geometric.readthedocs.io/en/latest/modules/nn.html#torch_geometric.nn.conv.GCNConv

https://pytorch.org/docs/stable/generated/torch.nn.BatchNorm1d.html

https://pytorch.org/docs/stable/nn.functional.html

1 | import torch |

1 | def train(model, data, train_idx, optimizer, loss_fn): |

1 | # Test function here |

1 | # Please do not change the args |

1 | if 'IS_GRADESCOPE_ENV' not in os.environ: |

1 | # Please do not change these args |

Epoch: 01, Loss: 4.2264, Train: 19.30%, Valid: 25.86% Test: 23.30%

Epoch: 02, Loss: 2.3266, Train: 22.93%, Valid: 22.20% Test: 27.58%

Epoch: 03, Loss: 1.9266, Train: 29.55%, Valid: 26.12% Test: 31.58%

Epoch: 04, Loss: 1.7227, Train: 32.78%, Valid: 33.06% Test: 35.57%

Epoch: 05, Loss: 1.6185, Train: 35.70%, Valid: 32.67% Test: 33.84%

Epoch: 06, Loss: 1.5277, Train: 39.24%, Valid: 35.68% Test: 35.93%

Epoch: 07, Loss: 1.4655, Train: 43.58%, Valid: 43.85% Test: 45.12%

Epoch: 08, Loss: 1.4239, Train: 47.11%, Valid: 49.65% Test: 51.94%

Epoch: 09, Loss: 1.3798, Train: 47.55%, Valid: 49.59% Test: 52.15%

Epoch: 10, Loss: 1.3428, Train: 47.21%, Valid: 48.50% Test: 51.24%

...

Epoch: 95, Loss: 0.9132, Train: 73.62%, Valid: 71.05% Test: 69.49%

Epoch: 96, Loss: 0.9135, Train: 73.67%, Valid: 71.52% Test: 70.41%

Epoch: 97, Loss: 0.9091, Train: 73.74%, Valid: 71.47% Test: 69.89%

Epoch: 98, Loss: 0.9096, Train: 73.82%, Valid: 71.75% Test: 70.59%

Epoch: 99, Loss: 0.9087, Train: 73.90%, Valid: 71.91% Test: 71.12%

Epoch: 100, Loss: 0.9057, Train: 74.07%, Valid: 71.67% Test: 70.79%

Question 5: What are your best_model validation and test accuracies? (20 points)

Run the cell below to see the results of your best model and save your model’s predictions to a file named ogbn-arxiv_node.csv. You can view this file by clicking on the Folder icon on the left side panel. Report the results on Gradescope.
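The training cell itself is not reproduced here, so the following is only a sketch of one common way a "best model" is chosen: keep the epoch with the highest validation accuracy. That selection rule is an assumption, although it is consistent with the reported best model. The numbers are copied from the last six epochs of the training log above; the variable names are hypothetical.

```python
# (epoch, train %, valid %, test %) copied from the final log lines of the run above.
history = [
    (95, 73.62, 71.05, 69.49),
    (96, 73.67, 71.52, 70.41),
    (97, 73.74, 71.47, 69.89),
    (98, 73.82, 71.75, 70.59),
    (99, 73.90, 71.91, 71.12),
    (100, 74.07, 71.67, 70.79),
]

# Assumption: the best model is the one with the highest validation accuracy.
best_epoch, best_train, best_valid, best_test = max(history, key=lambda row: row[2])
print(f"Best epoch: {best_epoch}, Train: {best_train:.2f}%, "
      f"Valid: {best_valid:.2f}%, Test: {best_test:.2f}%")
```

Selecting on validation rather than test accuracy keeps the test set untouched until the final report.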

1 | if 'IS_GRADESCOPE_ENV' not in os.environ: |

Saving Model Predictions

Best model: Train: 73.90%, Valid: 71.91% Test: 71.12%

4) GNN: Graph Property Prediction

In this section we will create a graph neural network for graph property prediction (graph classification).

Load and preprocess the dataset

1 | from ogb.graphproppred import PygGraphPropPredDataset, Evaluator |

Device: cpu

Task type: binary classification

1 | # Load the dataset splits into corresponding dataloaders |

1 | if 'IS_GRADESCOPE_ENV' not in os.environ: |

Graph Prediction Model

Graph Mini-Batching

Before diving into the actual model, we introduce the concept of mini-batching with graphs. In order to parallelize the processing of a mini-batch of graphs, PyG combines the graphs into a single disconnected graph data object (torch_geometric.data.Batch). torch_geometric.data.Batch inherits from torch_geometric.data.Data (introduced earlier) and contains an additional attribute called batch.

The batch attribute is a vector mapping each node to the index of its corresponding graph within the mini-batch:

batch = [0, ..., 0, 1, ..., n - 2, n - 1, ..., n - 1]

This attribute records which graph each node belongs to. It can be used, for example, to average the node embeddings of each graph separately and thereby compute graph-level embeddings.
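The idea of merging several graphs into one disconnected graph can be sketched without PyG. In the hypothetical `collate` helper below (plain Python lists, not the actual Batch implementation), node ids of each graph are shifted by the number of nodes that came before it, and the batch vector records the graph index of every node.

```python
def collate(graphs):
    """Merge graphs into one disconnected graph.

    graphs: list of (num_nodes, edge_index) with edge_index = [sources, targets].
    Returns the merged edge_index and the batch vector.
    """
    merged_sources, merged_targets, batch = [], [], []
    offset = 0  # number of nodes already placed in the merged graph
    for graph_id, (num_nodes, (sources, targets)) in enumerate(graphs):
        merged_sources.extend(u + offset for u in sources)
        merged_targets.extend(v + offset for v in targets)
        batch.extend([graph_id] * num_nodes)
        offset += num_nodes
    return [merged_sources, merged_targets], batch


g0 = (2, [[0, 1], [1, 0]])              # single edge 0 - 1
g1 = (3, [[0, 1, 1, 2], [1, 0, 2, 1]])  # path 0 - 1 - 2
edge_index, batch = collate([g0, g1])
print(edge_index)
print(batch)
```

Because no edge connects nodes of different graphs, message passing on the merged graph never mixes information across graphs in the mini-batch.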

Implementation

Now, we have all of the tools to implement a GCN Graph Prediction model!

We will reuse the existing GCN model to generate node_embeddings and then use Global Pooling over the nodes to create graph-level embeddings that can be used to predict properties for each graph. Remember that the batch attribute will be essential for performing Global Pooling over our mini-batch of graphs.

References:

https://pytorch-geometric.readthedocs.io/en/latest/modules/nn.html#global-pooling-layers
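To illustrate what global mean pooling does with the batch vector, here is a minimal plain-Python sketch. In PyG the corresponding layer is global_mean_pool, which operates on tensors; the `mean_pool` function below is a hypothetical stand-in that assumes every graph in the mini-batch has at least one node.

```python
def mean_pool(x, batch, num_graphs):
    """Average node embeddings per graph, using batch[i] as the graph index of node i."""
    dim = len(x[0])
    sums = [[0.0] * dim for _ in range(num_graphs)]
    counts = [0] * num_graphs
    for features, graph_id in zip(x, batch):
        counts[graph_id] += 1
        for k in range(dim):
            sums[graph_id][k] += features[k]
    # Assumes counts[g] > 0 for every graph g.
    return [[total / counts[g] for total in sums[g]] for g in range(num_graphs)]


# Five nodes with 2-dimensional embeddings: two nodes in graph 0, three in graph 1.
x = [[1.0, 2.0], [3.0, 4.0], [10.0, 0.0], [20.0, 0.0], [30.0, 3.0]]
batch = [0, 0, 1, 1, 1]
pooled = mean_pool(x, batch, num_graphs=2)
print(pooled)
```

The result has one row per graph, regardless of how many nodes each graph contains, which is what a graph-level prediction head needs as input.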

1 | from ogb.graphproppred.mol_encoder import AtomEncoder |

1 | def train(model, device, data_loader, optimizer, loss_fn): |

1 | # The evaluation function |

1 | if 'IS_GRADESCOPE_ENV' not in os.environ: |

1 | # Please do not change these args |

Training...


Evaluating...


Epoch: 01, Loss: 0.1584, Train: 68.88%, Valid: 62.36% Test: 62.30%

Training...


Evaluating...


Epoch: 02, Loss: 0.1501, Train: 72.18%, Valid: 74.67% Test: 70.18%

...

Epoch: 29, Loss: 0.1252, Train: 84.55%, Valid: 77.83% Test: 74.29%

Training...


Evaluating...


Epoch: 30, Loss: 0.1249, Train: 84.06%, Valid: 78.64% Test: 75.13%

Question 6: What are your best_model validation and test ROC-AUC scores? (20 points)

Run the cell below to see the results of your best model and save your model’s predictions in files named ogbg-molhiv_graph_[valid,test].csv. Again, you can view the files by clicking on the Folder icon on the left side panel. Report the results on Gradescope.
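For readers unfamiliar with the metric, ROC-AUC can be read as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below computes it by brute force over all positive/negative pairs. It is meant only to illustrate the definition; in this Colab the score comes from the OGB Evaluator, and the function name `roc_auc` is hypothetical.

```python
def roc_auc(labels, scores):
    """Pairwise ROC-AUC for binary labels (0 or 1) and real-valued scores."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("ROC-AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half a win
    return wins / (len(positives) * len(negatives))


auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(auc)
```

The pairwise loop is quadratic in the number of examples, so it is only suitable for small illustrations; library implementations use a sorting-based approach.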

1 | if 'IS_GRADESCOPE_ENV' not in os.environ: |


Saving Model Predictions


Saving Model Predictions

Best model: Train: 81.89%, Valid: 80.14% Test: 74.33%

Question 7 (Optional): Experiment with the two other global pooling layers in PyTorch Geometric.
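Besides mean pooling, PyG offers global_add_pool and global_max_pool. As a rough picture of how they differ, the plain-Python sketch below groups node embeddings by graph and applies a sum or an element-wise maximum. The `pool` helper is hypothetical and works on lists rather than tensors.

```python
def pool(x, batch, num_graphs, reduce):
    """Group node embeddings by graph and reduce each feature column with `reduce`."""
    groups = [[] for _ in range(num_graphs)]
    for features, graph_id in zip(x, batch):
        groups[graph_id].append(features)
    # zip(*rows) turns the node rows of one graph into per-feature columns.
    return [[reduce(column) for column in zip(*rows)] for rows in groups]


x = [[1.0, 2.0], [3.0, -4.0], [10.0, 0.0]]
batch = [0, 0, 1]  # first two nodes belong to graph 0, the last to graph 1

summed = pool(x, batch, 2, sum)  # analogous to add pooling
maxed = pool(x, batch, 2, max)   # analogous to max pooling
print(summed)
print(maxed)
```

Sum pooling keeps information about graph size, while max pooling keeps only the strongest activation per feature, so the two can behave quite differently on molecules of varying size.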

Submission

To submit Colab 2, please submit to the following assignments on Gradescope:

- “Colab 2”: submit your answers to the questions in this assignment

- “Colab 2 Code”: submit your completed CS224W_Colab_2.ipynb. From the “File” menu select “Download .ipynb” to save a local copy of your completed Colab. PLEASE DO NOT CHANGE THE NAME! The autograder depends on the .ipynb file being called “CS224W_Colab_2.ipynb”.

Clarification:

- In “Colab 2 Code”, we grade Q1-Q4 (non-training questions) using the autograder.

- In “Colab 2”, we grade Q5-Q6 (training questions), where Q1-Q4 are assigned 0 points.